Why do translation programmes or chatbots on our computers often show discriminatory tendencies towards gender or race? Here is an easy guide to understanding how bias in natural language processing works. We explain why sexist technologies like search engines are not just an unfortunate coincidence.

What is bias in translation programmes?

Have you ever used machine translation to translate a sentence into Estonian and back again? In some languages, like Estonian, pronouns and nouns do not indicate gender. When translating to English, the software has to make a choice. Which word becomes male and which female? Often, however, it is a choice grounded in stereotypes. Is this just a coincidence?

These kinds of systems are created using large amounts of language data, and this naturally occurring language data contains biases: systematic and unfair discrimination against certain individuals or groups of individuals in favour of others. The way these systems are created can even amplify these pre-existing biases. In this blog post we ask where such distortions come from and whether there is anything we can do to reduce them.

We’ll start with a technical explanation of how individual words from large amounts of language data are transformed into numerical representations, so-called embeddings, so that computers can make sense of them. This is not to bore you but to make clear that bias in word embeddings is no coincidence but rather a logical byproduct. We’ll then discuss what happens when biased embeddings are used in practical applications.

Word embeddings: what, why, and how?

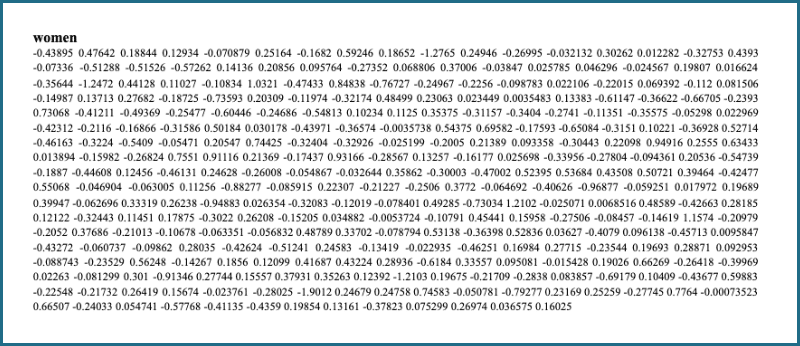

Word embeddings are simply lists of numbers. When you search Google for “women”, what the program sees is a list of numbers, and the results are based on calculations on those numbers. Computers cannot process words directly, and this is why we need embeddings.

Figure 2: Numerical representation or word embedding of the word “woman”. It is nothing but a long list, or vector, of numbers.

The main goal is to transform words into numbers such that only a minimum of information is lost. Take the following example. Each word is represented by a single number.
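As a minimal sketch of this one-number-per-word idea, the Python snippet below gives every distinct word its own integer. The sentence is an assumption (it is the one this post uses later on), and the variable names are made up for illustration.

```python
# Toy example: represent each distinct word by a single integer ID.
# The sentence is assumed; it mirrors the example used later in this post.
sentence = "the nurse and the doctor work at the hospital"

vocabulary = {}
for word in sentence.split():
    # A word keeps the ID it received the first time it was seen.
    if word not in vocabulary:
        vocabulary[word] = len(vocabulary)

# Replace every word in the sentence by its ID.
encoded = [vocabulary[word] for word in sentence.split()]

print(vocabulary)
print(encoded)
```

Note that the IDs only tell words apart. The number for “nurse” is no closer to the number for “doctor” than to any other.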

However, we can capture a lot more information. For instance, “nurse”, “doctor”, and “hospital” are somehow similar. We might want similarity to be represented in the embeddings, too. And this is why we use more sophisticated methods.

Word embeddings and Machine Learning

We’ll focus on a particular embedding method called GloVe. GloVe falls into the category of machine learning. This means that we don’t manually assign numbers to a word like we did before but rather learn the embeddings from a large corpus of language (and by large we mean billions of words).

The basic idea is often expressed by the phrase: “You shall know a word by the company it keeps”. This simply means that the context of a word says a lot about its meaning. Take the previous sentence again. If we were to mask one of the words, you could still roughly guess what it is: “The ___ and the doctor work at the hospital.” This idea forms the foundation for GloVe, and its algorithm consists of three steps.

- First, we sweep a window over a sentence from our language corpus. The size of the window is up to us and depends on how large we want the surrounding context of a word to be. Experiments have shown that with a window size of two (like below) “Hogwarts” is more strongly associated with other fictional schools like “Sunnydale” or “Evernight”, but with a size of five it is associated with words like “Dumbledore” or “Malfoy”.

- Second, we count the co-occurrences of the words. By co-occurrence we mean the words that appear in the same window as the given word. “Nurse”, for example, co-occurs once with “and” and twice with “the”. The result can be displayed in a table.

- The third step is the actual learning. We won’t go into detail because this would require a lot of maths. However, the basic idea is simple. We calculate co-occurrence probabilities. Words that co-occur often, such as “doctor” and “nurse”, get a high probability, and unlikely pairs like “doctor” and “elephant” get a low probability. Finally, we assign the embeddings according to the probabilities. The embedding for “doctor” and the one for “nurse”, for instance, will have some similar numbers, and they will be quite different from the numbers for “elephant”.

That’s it! These are all the steps needed to create GloVe embeddings. All that was done was to sweep a window over millions of sentences, count the co-occurrences, calculate the probabilities, and assign the embeddings.
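The windowing and counting steps, plus a very simple version of the co-occurrence probabilities, could be sketched in Python roughly as follows. This is an illustration under assumptions, not GloVe’s actual implementation: the function names are made up for this post, the window is symmetric with a size of two on each side, and the learning step that turns the statistics into vectors is left out entirely.

```python
from collections import Counter, defaultdict


def cooccurrence_counts(tokens, window_size=2):
    """Count how often each word appears within `window_size` positions of another."""
    counts = defaultdict(Counter)
    for i, word in enumerate(tokens):
        start = max(0, i - window_size)
        end = min(len(tokens), i + window_size + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                counts[word][tokens[j]] += 1
    return counts


def cooccurrence_probabilities(counts, word):
    """Share of `word`'s context slots taken up by each context word."""
    total = sum(counts[word].values())
    return {context: n / total for context, n in counts[word].items()}


tokens = "the nurse and the doctor work at the hospital".split()
counts = cooccurrence_counts(tokens, window_size=2)

# "nurse" sees "the" twice and "and" once inside its window.
print(dict(counts["nurse"]))
print(cooccurrence_probabilities(counts, "nurse"))
```

On a single sentence the numbers say very little. The statistics only become meaningful once they are collected over a very large corpus.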

While GloVe is no longer used in most modern technologies and has been replaced by slightly more advanced methods, the underlying principles are the same: similarity (and meaning) are assigned based on co-occurrence.

Bias in Natural Language Processing: Is it just a fantasy?

But back to the original topic: what is it about this method that makes it so vulnerable to biases? Imagine that words like “engineer” often co-occur with “man”, or “nurse” with “woman”. GloVe will assign strong similarity to those pairs, too. And this is a problem. To emphasise this point: bias in word embeddings is no coincidence. The very same mechanism that gives them the ability to capture the meaning of words is also responsible for gender, race and other biases.
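One common way to put a number on “similarity” between two embeddings is cosine similarity. The sketch below uses small hand-picked vectors that are purely hypothetical (they do not come from GloVe or any trained model) to show what such an association looks like once it is baked into the numbers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, -1 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical three-dimensional embeddings, invented for illustration only.
toy_embeddings = {
    "man": [1.0, 0.0, 0.2],
    "woman": [-1.0, 0.0, 0.2],
    "engineer": [0.6, 0.8, 0.1],
    "nurse": [-0.6, 0.8, 0.1],
}

for job in ("engineer", "nurse"):
    to_man = cosine_similarity(toy_embeddings[job], toy_embeddings["man"])
    to_woman = cosine_similarity(toy_embeddings[job], toy_embeddings["woman"])
    # A positive gap means the job word sits closer to "man" than to "woman".
    print(job, round(to_man - to_woman, 3))
```

In a trained model nobody picks these numbers by hand. They fall out of the co-occurrence statistics, which is exactly why the stereotype ends up in them.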

Bias in embeddings is certainly not cool. But is it merely a theoretical problem or are there also real-world implications? Well, here are some examples to convince you of the latter.

Word embeddings constitute the input of most modern systems, including many ubiquitous technologies that we use daily. From search engines to speech recognition, translation tools to predictive text, embeddings form the foundation of all of these. And when the foundation is biased, there is a good chance that the bias spreads to the entire system.

Male-trained word embeddings fuel gender bias: It’s real life

When you type “machine learning” into a search engine, you will get thousands of results, too many to go through all of them. So you need to rank them by relevance. But how do you determine relevance? As has been shown, one efficient way is to use word embeddings and rank by similarity. Results that include words similar to “machine learning” will get higher rankings. However, it has also been shown that embeddings are often gender biased. Say you search for “Computer science PhD student at Humboldt University”. What might happen is that the webpages of male PhD students are ranked higher because their names are more strongly associated with “computer science”. And this in turn reduces the visibility of women in computer science.
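To make the ranking idea concrete, here is a toy sketch of ranking by embedding similarity. Everything in it is an assumption for illustration: the two-dimensional vectors are invented, the page names are hypothetical, and real search engines combine many more signals than this.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Invented vectors: first number = gender association picked up in training,
# second number = how strongly the text is about computer science.
query = [0.5, 0.9]  # "computer science", carrying a male-leaning association
pages = {
    "page_of_male_student": [0.6, 0.8],
    "page_of_female_student": [-0.6, 0.8],
}

# Rank pages from most to least similar to the query.
ranked = sorted(pages, key=lambda name: cosine_similarity(query, pages[name]), reverse=True)
print(ranked)
```

Both hypothetical pages are equally about computer science (the second number is identical), yet the gender association carried by the query vector alone decides which one comes first.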

Maybe it is no surprise that these word embeddings grow up to be biased, given what they are fed with. The training data of GPT-2, one of the biggest language models, is sourced by scraping outbound links from Reddit, which is mainly populated by males between the ages of 18 and 29. However, there are also embeddings that have been trained on Wikipedia, a seemingly unbiased source, and yet they turn out to be biased. This might be because only about 18% of biographies on (English) Wikipedia are about women and only 8–15% of contributors are female. Truth is, finding large balanced language data sets is a pretty tough job.

We could go on and on with examples, but let us now turn to something more positive: solutions!

So what can we do against bias in Natural Language Processing?

So, the bad news: language-based technology is often racist, sexist, ableist, ageist… the list goes on. But there is a faint glimmer of good news: we can do something about it! Even better news: awareness that these problems exist is already the first step. We can also try to nip the problem in the bud and create a dataset that contains less bias from the outset.

As this is nigh on impossible, since linguistic data will always include pre-existing biases, an alternative is to properly describe your dataset. That way, both research and industry can ensure that they only use appropriate datasets and can assess more thoroughly what impact using a certain dataset in a certain context may have.

On the technical level, various techniques have been proposed to reduce bias in the actual system. Some of these techniques involve judging the appropriateness of a distinction: for example, the gender distinction between ‘mother’ and ‘father’ is appropriate, whereas the one between ‘homemaker’ and ‘programmer’ is rather inappropriate. The word embeddings for these inappropriate word pairs are then adjusted accordingly. How successful these methods actually are in practice is debatable.
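One way such an adjustment can work is to estimate a “gender direction” from an appropriate pair like ‘mother’ and ‘father’ and then remove that component from words that should be neutral. The sketch below shows only this projection step, with invented toy vectors and made-up function names. It is an assumption about what an adjustment might look like, not necessarily the exact method the techniques above use.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def neutralise(vector, direction):
    """Remove the component of `vector` that lies along `direction`."""
    norm = math.sqrt(dot(direction, direction))
    unit = [x / norm for x in direction]
    projection = dot(vector, unit)
    return [v - projection * u for v, u in zip(vector, unit)]


# Hypothetical embeddings, invented for illustration only.
toy_embeddings = {
    "father": [1.0, 0.5, 0.3],
    "mother": [-1.0, 0.5, 0.3],
    "programmer": [0.7, 0.2, 0.9],
}

# Estimate the gender direction from a pair where the distinction is appropriate.
gender_direction = [f - m for f, m in zip(toy_embeddings["father"], toy_embeddings["mother"])]

# Adjust a word that should not carry a gender component.
adjusted = neutralise(toy_embeddings["programmer"], gender_direction)
print(adjusted)
```

After the adjustment, the toy vector for ‘programmer’ has no component left along the estimated gender direction, while its other numbers are untouched. Whether this removes the bias or merely hides it is part of the debate mentioned above.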

Basically, the people who may be affected by a technology, particularly those affected adversely, should be at the centre of research. This also involves consulting with all potential stakeholders at all stages of the development process: the earlier the better!

Watch your data and try participation

Biases that exist in our society are reflected in the language we use; this is further reflected and can even be amplified in technologies that have language as a basis. At the very least, technologies that have critical outcomes, such as a system that automatically decides whether or not to grant loans, should be designed in a participatory manner, from the beginning of the design process to the very end. Just because people can be discriminatory, this doesn’t mean that computers have to be too! There are tools and methods to decrease the effects, and we can even use word embeddings to do research on bias. So: be aware of what data you are using, try out technical de-biasing methods and always keep various stakeholders in the loop.