Behind Meta's AI model Llama lies impressive power and enormous potential. The parameters of Llama 2 drive a range of features that give the model the potential to push the boundaries of machine learning even further. It represents a significant advance in the field of artificial intelligence and offers businesses and developers a wealth of opportunities for customization and optimization. In this article, we take a closer look at the Llama 2 model and its parameters and explore its most exciting features and capabilities.

What is Llama 2, and what are its parameters?

ImageFlash

Llama 2 is an intriguing AI language model developed by Meta and built on an extensive configuration of parameters. These parameters play a crucial role in the model's performance, and Meta went to great lengths to ensure that the model is well trained and achieves the desired results.

One of the key factors in developing Llama 2 is the large amount of data used for training. By drawing on a wide range of sources and articles, the model was able to build a comprehensive and diverse knowledge base. Llama 2's input system is designed for simple, efficient interaction: users can enter text as individual sentences, paragraphs, or entire documents, and the model then generates appropriate responses.
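To make the input side more concrete, here is a minimal sketch of how a single-turn request can be laid out for the Llama 2 chat models. The `[INST]` and `<<SYS>>` markers follow the prompt format used in Meta's reference code for Llama 2-Chat; the helper name `build_chat_prompt` is hypothetical, and writing `<s>` as literal text is a simplification, because a real tokenizer adds the beginning-of-sequence token as a special token ID.

```python
# Markers used by the Llama 2 chat prompt format.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_chat_prompt(system_prompt: str, user_message: str) -> str:
    """Wrap a system prompt and one user message in Llama 2 chat markup.

    Hypothetical helper: "<s>" is written as text here for readability; a real
    tokenizer inserts the beginning-of-sequence token as a special token ID.
    """
    # The system prompt is folded into the first user turn.
    content = f"{B_SYS}{system_prompt.strip()}{E_SYS}{user_message.strip()}"
    return f"<s>{B_INST} {content} {E_INST}"


if __name__ == "__main__":
    prompt = build_chat_prompt(
        "You are a concise technical assistant.",
        "Summarize this paragraph in one sentence: ...",
    )
    print(prompt)
```

Multi-turn conversations repeat the `[INST] ... [/INST]` wrapper for each user message, with the model's earlier answers placed between them; this sketch covers only the first turn.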

Another important aspect is the tokens that serve as the basis for the model. Llama 2 was pretrained on a very large number of tokens (Meta reports 2 trillion), which supports detailed and precise text generation. Key parameters, such as the language used, the size of the model, and the tools built around it, have a decisive impact on Llama 2's performance.

One exciting aspect of Llama 2 is how well it compares with other language models. Meta's extensive research and development work made it possible to create a model that achieves excellent results in many areas. Numerous test runs and comparisons show that Llama 2 performs outstandingly in terms of text generation, relevance, and coherence.

How did Meta train the Llama 2 model?

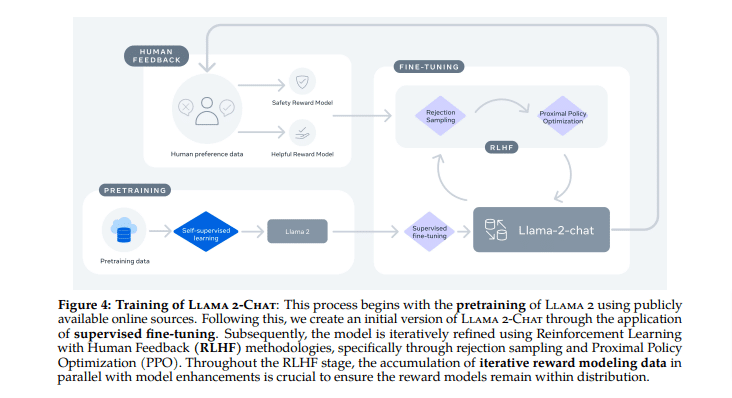

Llama 2 is an impressive language model developed by Meta. But how was this model actually trained? To train Llama 2, Meta used a number of parameters that play an important role in the model's performance.

Meta

- Data collection: First, various data sources were used to provide Llama 2 with enough text material. Texts from the internet, scientific articles, news, and many other sources of information were used to give the model the broadest possible knowledge base. This data was thoroughly processed and converted into a suitable format so that it could be fed into the model.

- Tokens: Another important aspect of the training process is the tokens used. A token can be seen as a kind of building block that enables the model to understand and generate text. Thanks to a well-designed token configuration, the model learned to better understand the connections between words and sentences and to generate more precise answers.

- Capacity adjustment: For training, Meta also ensured that the model had access to sufficient computing capacity. Llama 2 is a large model, and to achieve the best possible performance, the computing resources have to be scaled accordingly. Meta used specially developed tools and high-performance hardware to process the data quickly and efficiently.

- Fine-tuning: The training parameters were carefully coordinated to achieve the best possible result. Meta conducted extensive research to find the right parameter values for Llama 2 to achieve high performance. Particular attention was paid to linguistic aspects and to the language patterns the model should learn.
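The list above does not spell out the training objective itself. Llama 2 is pretrained as an autoregressive model, that is, to predict the next token, and the standard loss for that task is cross-entropy. The sketch below computes it for hand-made probability distributions over a toy four-token vocabulary; the function name and the numbers are illustrative and not taken from Meta's code.

```python
import math
from typing import List, Sequence


def next_token_loss(predicted_probs: Sequence[Sequence[float]],
                    target_ids: List[int]) -> float:
    """Average cross-entropy for next-token prediction.

    predicted_probs[t] is the model's probability distribution over the
    vocabulary at position t; target_ids[t] is the token that actually came
    next in the training text.
    """
    if len(predicted_probs) != len(target_ids):
        raise ValueError("need one distribution per target token")
    total = 0.0
    for dist, target in zip(predicted_probs, target_ids):
        # The model is penalized by -log p(correct next token).
        total += -math.log(dist[target])
    return total / len(target_ids)


# Toy example: the model is fairly sure about the first token and
# completely unsure (uniform) about the second.
toy_probs = [[0.7, 0.1, 0.1, 0.1], [0.25, 0.25, 0.25, 0.25]]
print(round(next_token_loss(toy_probs, [0, 2]), 4))
```

Training lowers this number by making the model assign higher probability to the tokens that actually follow in the data.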

Overall, Meta put a great deal of effort into training Llama 2 successfully. By carefully selecting and configuring the parameters, using diverse data sources, and optimizing the training processes, Meta developed an impressive language model capable of producing diverse and precise answers.

Click here to learn how to use Llama 2.

What parameters and capabilities does Llama 2 have?

To understand the core functions of Llama 2, it is worth taking a closer look at some of its most important parameters. Overall, Llama 2's many parameters make for a rich and powerful language model. With its precise adaptation, its ability to process large amounts of data, and its complex architecture, Llama 2 clearly stands out from other language models. It is an impressive tool for text generation and can be used in many areas, such as article writing, chatbots, and other text-based tools.

Meta

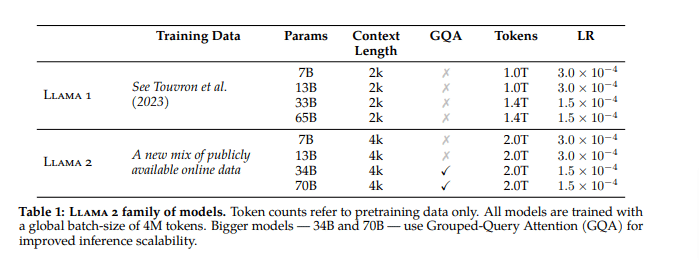

Here are the main facts about Llama 2, including the parameter sizes:

- Models: Meta is releasing several models, including Llama 2 base models with 7, 13, and 70 billion parameters and Llama 2-Chat variants in the same sizes; a 34-billion-parameter version is described in the paper but was not released. Meta increased the size of the pretraining corpus by 40%, doubled the model's context length (to 4k tokens), and introduced grouped-query attention for the larger models (Ainslie et al., 2023).

- Is it open source? Technically, the model is not open source, because its development and use are not fully open to the public. It is still useful for the open-source community, but it is an open release / open innovation rather than open source [more on that here].

- Capabilities: Extensive benchmarking results, and for the first time I am convinced that an open-source model is at ChatGPT's level (except for coding).

- Cost: Huge budgets and commitment (e.g., an estimated cost of around $25 million for preference data, assuming market prices) and a very large team. This is how high the bar for developing a general-purpose model has become.

- Code / Math / Reasoning: The paper says little about code data, either in pretraining or in the RLHF process. For example, StarCoder, with only 15 billion parameters, beats the best Llama 2 model, scoring 40.8 on HumanEval and 49.5 on MBPP (Python).

- Consistency across multiple turns: A new method for keeping the model consistent over several dialogue turns, Ghost Attention (GAtt), inspired by Context Distillation. Methods like this are often workarounds that improve the model's performance until we better understand how to train models to meet our needs.

- Reward models: Uses two separate reward models, one for helpfulness and one for safety, to avoid the safety/helpfulness trade-off identified by the AI company Anthropic.

- Data control: Extensive discussion of controlling the data distribution (as I said, this is essential for RLHF). This is very difficult to reproduce.

- RLHF process: Uses a two-stage RLHF approach, starting with rejection sampling and then combining rejection sampling with Proximal Policy Optimization (PPO). The paper stresses how important RLHF is and argues that the strong writing abilities of LLMs are largely driven by RLHF.

- Generation: The temperature parameter needs to be adjusted depending on the context (e.g., creative tasks require a higher temperature; see Section 5 / Fig. 21).

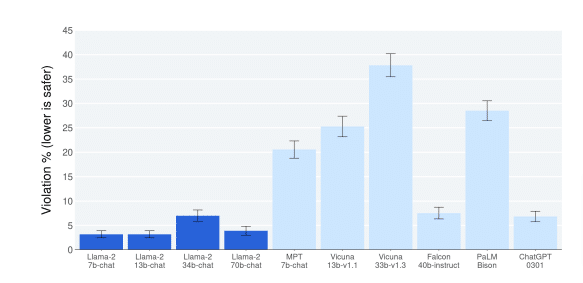

- Safety/harm evaluations: Very extensive safety evaluations (almost half of the paper), along with detailed Context Distillation and RLHF for safety purposes. The results are not perfect and have gaps, but they are a step in the right direction.
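Grouped-query attention, mentioned under Models above, lets several query heads share one key/value head, which shrinks the key/value cache the model keeps while generating text. The arithmetic below assumes the configuration commonly published for Llama 2 70B (80 layers, 64 query heads, 8 key/value heads, head dimension 128), 16-bit values, and a full 4k-token context for a single sequence; treat these values as assumptions and check them against the official model card.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence.

    The leading factor 2 accounts for storing one key vector and one value
    vector per key/value head, per layer, per token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def kv_head_for(query_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Index of the shared key/value head that a given query head reads from."""
    group_size = n_q_heads // n_kv_heads  # query heads per key/value group
    return query_head // group_size


# Assumed Llama 2 70B shape (verify against the official model card).
LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM, CONTEXT = 80, 64, 8, 128, 4096

mha = kv_cache_bytes(LAYERS, Q_HEADS, HEAD_DIM, CONTEXT)   # one K/V head per query head
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, CONTEXT)  # 8 query heads share each K/V head
print(f"MHA: {mha / 2**30:.2f} GiB, GQA: {gqa / 2**30:.2f} GiB")
# prints: MHA: 10.00 GiB, GQA: 1.25 GiB
```

Under these assumptions, sharing each key/value head across eight query heads cuts the cache by a factor of eight, which matters most for long contexts and large batches.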
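Ghost Attention is described only briefly in the list above. The following is a simplified sketch of its data-construction idea as we understand it from the paper: dialogues are generated with an instruction (for example, "always answer briefly") attached to every user message, then the instruction is kept only in the first user turn and the loss is masked out for the earlier turns. The function name `gatt_sample` and the data layout are hypothetical, and the step that samples replies from the RLHF model is left out.

```python
from typing import List, Tuple

Turn = Tuple[str, str]      # (user_message, assistant_message)
Message = Tuple[str, str]   # (role, text)


def gatt_sample(instruction: str,
                dialogue: List[Turn]) -> Tuple[List[Message], List[bool]]:
    """Build a Ghost Attention-style training example (hypothetical sketch).

    Assumes `dialogue` was generated with `instruction` prepended to every user
    message, so each assistant reply already follows the instruction. The
    instruction is now kept only in the first user turn, and the returned loss
    mask marks which messages contribute to the training loss.
    """
    messages: List[Message] = []
    loss_mask: List[bool] = []
    for i, (user, assistant) in enumerate(dialogue):
        user_text = f"{instruction}\n{user}" if i == 0 else user
        is_last = i == len(dialogue) - 1
        messages += [("user", user_text), ("assistant", assistant)]
        # User turns never count; earlier assistant turns are masked out too.
        loss_mask += [False, is_last]
    return messages, loss_mask


msgs, mask = gatt_sample("Always answer briefly.",
                         [("Hi", "Hello."), ("How are you?", "Fine.")])
print(msgs)
print(mask)
```

The model therefore has to learn to keep following an instruction that, in the training data, appears only at the start of the conversation.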
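The reward-model and rejection-sampling points can be combined in one sketch: sample several candidate responses, score each one, and keep the best. The rule for merging the two scores (use the safety score for safety-tagged prompts or when it falls below a threshold, otherwise the helpfulness score) follows the piecewise combination the Llama 2 paper describes for its RLHF reward, but the 0.15 default threshold, the function names, and the toy reward functions here are assumptions for illustration, not Meta's implementation.

```python
from typing import Callable, List

# A reward model maps (prompt, response) to a score.
Reward = Callable[[str, str], float]


def combined_reward(prompt: str, response: str, helpful_rm: Reward,
                    safety_rm: Reward, is_safety_prompt: bool,
                    safety_threshold: float = 0.15) -> float:
    """Use the safety score for risky cases, otherwise the helpfulness score."""
    safety = safety_rm(prompt, response)
    if is_safety_prompt or safety < safety_threshold:
        return safety
    return helpful_rm(prompt, response)


def rejection_sample(prompt: str, candidates: List[str], helpful_rm: Reward,
                     safety_rm: Reward, is_safety_prompt: bool = False) -> str:
    """Keep the highest-scoring response out of K sampled candidates."""
    return max(candidates, key=lambda r: combined_reward(
        prompt, r, helpful_rm, safety_rm, is_safety_prompt))


# Toy stand-ins for the two reward models (purely illustrative).
def toy_helpfulness(prompt: str, response: str) -> float:
    return len(response) / 100  # pretend longer answers are more helpful


def toy_safety(prompt: str, response: str) -> float:
    return 0.0 if "unsafe" in response else 1.0


candidates = ["short", "a much longer helpful answer",
              "unsafe but very very long answer text"]
print(rejection_sample("question", candidates, toy_helpfulness, toy_safety))
```

In the toy run, the longest candidate loses because its low safety score overrides its helpfulness score.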
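Temperature, from the Generation point above, rescales the model's raw scores (logits) before they are turned into probabilities: values below 1 sharpen the distribution toward the most likely token, and values above 1 flatten it and make sampling more varied. A minimal sketch with made-up logits for a three-token vocabulary:

```python
import math
import random
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float],
                             temperature: float) -> List[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits: Sequence[float], temperature: float,
                 rng: random.Random) -> int:
    """Draw one token index from the temperature-adjusted distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.5]
for t in (0.5, 1.0, 1.5):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(sample_token(logits, 1.0, random.Random(0)))
```

With these logits, the top token's probability falls as the temperature rises, which is why creative tasks tend to use higher values.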

How does Llama 2 compare to other AI models like ChatGPT?

Meta

In terms of performance, Llama 2 outperforms many other language models. Thanks to its advanced parameter configuration and extensive training, Llama 2 shows impressive text generation and comprehension. It can play to its strengths especially on complex tasks, such as ChatGPT-style dialogue or drawing on specific sources of information.

The base model appears to be very powerful (beyond GPT-3), and the fine-tuned chat models appear to be on par with ChatGPT. This is a major step forward for open source and a major blow to closed-source vendors, because using this model gives companies far more customization options at significantly lower cost.

Overall, Llama 2 is a powerful and versatile language model that achieves excellent results compared with other models, thanks to its specific parameters and extensive training. It gives users effective access to a wide range of text data and provides tools for precise control of the model. Because of these properties, Llama 2 can support a wide range of applications and is a notable option in the world of language models.

The best Llama 2 alternative: ChatFlash!

Are you looking for a powerful German chatbot with the latest GPT technology, or an AI solution that gives you even more versatility? Then try ChatFlash now!

Personalities let you steer and influence the output of the magic pen in a targeted way. neuroflash also offers optimized prompts with templates, which are tailored to a wide range of applications and can be used freely.

Templates: Get inspired by the large selection of text templates to get started even faster. Decide what kind of text you want to generate with ChatFlash and receive direct suggestions for a suitable prompt.

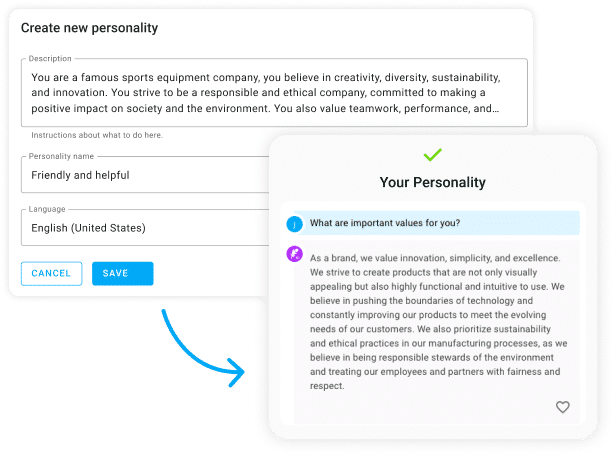

Personalities: You decide who the magic pen should be. Personalities let you customize the scope of the chat for even more relevant and targeted results. The output ChatFlash generates is closely tied to the chosen personality and adapts to the context of the conversation.

A personality defines the following:

- tone of the conversation

- role (function)

- character, style

- context of the expected response

You can choose from different personalities. For example, ChatFlash can respond as an SEO consultant, social media influencer, journalist, or writing coach. You can also add your own personalities, for example to adapt ChatFlash to your corporate identity or your personal writing style. Here's how:

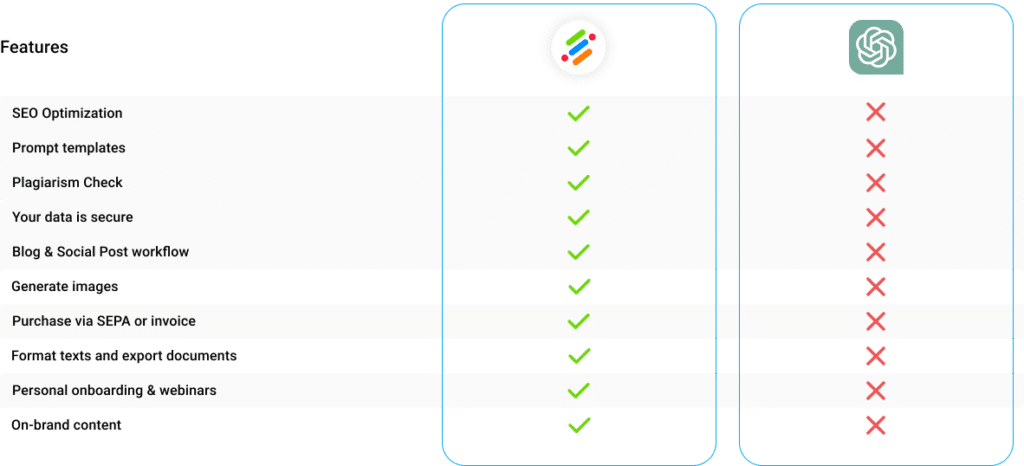

Finally, neuroflash offers a variety of other functions for further editing texts. Various workflows and additional features, such as SEO analysis, a browser extension, and an AI image generator, also add great value for anyone who needs texts for professional purposes.

Use ChatFlash for free

Frequently Asked Questions

How large is Llama 2 70B?

Llama 2 70B has 70 billion parameters. For comparison, here are the sizes of the different Llama models:

- Llama 1: 65B parameters

- Llama 2: 7B parameters

- Llama 2: 13B parameters

- Llama 2: 70B parameters

These sizes indicate the relative scale of the different Llama versions, and the larger models generally achieve higher MMLU scores. MMLU (Massive Multitask Language Understanding) is a benchmark of multiple-choice questions across 57 subjects; the score is the percentage of questions answered correctly, on a scale from 0 to 100, and higher scores indicate broader knowledge and better problem-solving.
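As a rough answer to "how big" in terms of memory, the weights alone need about (number of parameters) × (bytes per parameter). The sketch below uses the nominal sizes from the list (actual parameter counts differ slightly) and ignores activations and the key/value cache, so real memory use is higher; the function name is illustrative.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate storage for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


for name, params in [("Llama 2 7B", 7e9), ("Llama 2 13B", 13e9),
                     ("Llama 2 70B", 70e9)]:
    fp16 = weight_memory_gb(params, 16)  # 16-bit floating point
    int4 = weight_memory_gb(params, 4)   # 4-bit quantized
    print(f"{name}: ~{fp16:.0f} GB in fp16, ~{int4:.1f} GB at 4 bits")
```

At 16 bits, 70 billion parameters come to about 140 GB; 4-bit quantization cuts that to about 35 GB.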

What tokenizer does Llama use?

Llama uses a SentencePiece byte-pair encoding (BPE) tokenizer with a vocabulary of 32,000 tokens. This tokenizer was trained specifically for the Llama models and should not be confused with the tokenizers used by OpenAI models.
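SentencePiece's BPE implementation works directly on raw text and includes details such as byte fallback for unknown characters, so the following is not Llama's tokenizer. It is a textbook sketch of the core byte-pair-encoding idea, repeatedly merging the most frequent adjacent pair of symbols, run on a made-up word list.

```python
from collections import Counter
from typing import List, Tuple


def learn_bpe_merges(words: List[str],
                     num_merges: int) -> Tuple[List[Tuple[str, str]], Counter]:
    """Learn byte-pair-encoding merges from a tiny corpus of words."""
    # Start with every word split into single characters, counted by frequency.
    vocab = Counter(tuple(word) for word in words)
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pair_counts: Counter = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Replace each occurrence of the best pair with one merged symbol.
        new_vocab: Counter = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges, vocab


merges, vocab = learn_bpe_merges(["low", "low", "lower", "lowest"], num_merges=2)
print(merges)
print(vocab)
```

On this corpus the first two merges build the subword `low`, which is shared by all four words; frequent fragments like this become single tokens, while rare words stay split into smaller pieces.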

Conclusion

In conclusion, Meta's Llama 2 is an impressive language model built on extensive research and cutting-edge technology. Its parameters play a crucial role, because they determine the model's performance and precision. With the right configuration, the model can be optimally adapted to the requirements at hand.

Overall, Llama 2 is a powerful and versatile language model with impressive performance and precision. Whether for research, journalistic articles, or chat applications like ChatGPT, Llama 2 is a valuable source of high-quality text. With its advanced language capabilities and broad scope, Llama 2 is definitely a model worth exploring.