Introduction to Long Short-Term Memory (LSTM)

Sequence prediction problems in data science often involve Long Short-Term Memory (LSTM) networks. These recurrent neural networks (RNNs) can learn order dependence. At each step of an RNN, the output of the previous step is used as an input to the current step. The LSTM was created by Hochreiter and Schmidhuber. It addressed the problem of long-term dependencies in RNNs: a plain RNN cannot predict words that depend on information from far back in the sequence, although it can make accurate predictions from recent information. As the gap length grows, RNN performance degrades. LSTMs, by contrast, retain information for a long time by default. The technique is used for processing, predicting, and classifying time-series data.

What is LSTM?

In the field of artificial intelligence (AI) and deep learning, LSTMs are long short-term memory networks, a kind of artificial neural network. Unlike standard feed-forward neural networks, these networks have feedback connections, which makes them recurrent neural networks. LSTM is applicable to tasks such as unsegmented, connected handwriting recognition, speech recognition, machine translation, robot control, video games, and healthcare.

Recurrent neural networks can process not only individual data points (such as images), but also entire sequences of data (such as speech or video). LSTM networks are well suited to classifying, processing, and making predictions based on time series data, as there may be lags of unknown duration between important events in a sequence. LSTMs were developed to deal with the vanishing gradient problem encountered when training traditional RNNs. Their relative insensitivity to gap length gives them an advantage over RNNs, hidden Markov models, and other sequence learning methods in many applications.

In theory, standard RNNs can keep track of arbitrary long-term dependencies in input sequences. The problem with vanilla RNNs is a practical one. When a vanilla RNN is trained using back-propagation, the long-term gradients that are back-propagated tend to vanish (that is, shrink to zero) or explode (grow without bound), because the computations involved use finite-precision numbers. Because LSTM units also allow gradients to flow unchanged, RNNs built from LSTM units partially solve the vanishing gradient problem. Even so, LSTM networks can still suffer from the exploding gradient problem.
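To make the vanishing and exploding behavior concrete, here is a minimal sketch. It assumes a deliberately simplified scalar, linear recurrence `h_t = w * h_(t-1)`, which is not a full RNN; the function name `backprop_factor` is made up for illustration. In this toy setting the gradient that reaches the first time step is scaled by `w` once per step.

```python
def backprop_factor(recurrent_weight: float, steps: int) -> float:
    """Scale of the gradient after `steps` time steps of h_t = w * h_(t-1).

    For this toy recurrence, d h_T / d h_0 equals w ** T.
    """
    factor = 1.0
    for _ in range(steps):
        factor *= recurrent_weight
    return factor


# A weight below 1 shrinks the gradient towards zero, a weight above 1
# blows it up, and a weight of exactly 1 passes it through unchanged.
for w in (0.5, 1.0, 1.5):
    print(w, backprop_factor(w, steps=50))
```

The case `w = 1.0` is the one an LSTM cell state approximates when its forget gate is close to 1, which is why gradients can flow through it largely unchanged.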

Structure of LSTM

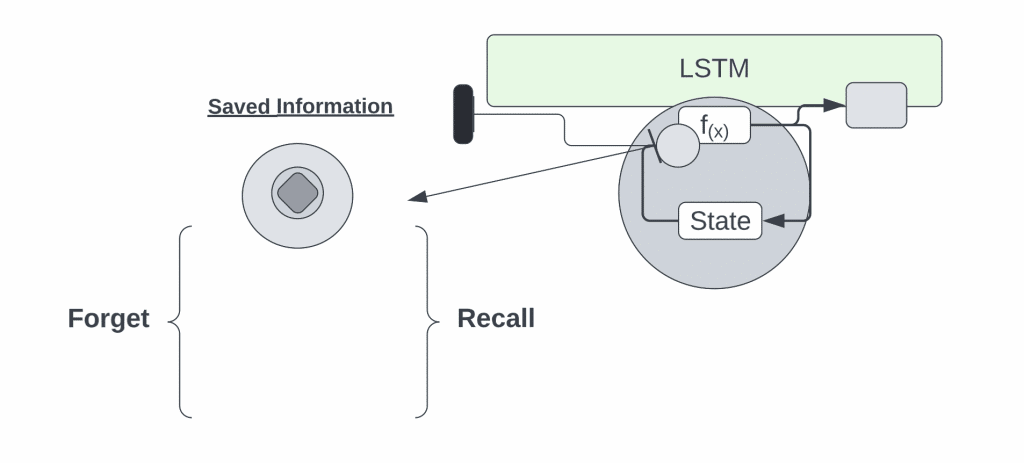

An LSTM is composed of four neural network layers and numerous memory blocks, or cells, that form a chain structure. A conventional LSTM unit has four components: a cell, an input gate, an output gate, and a forget gate. The three gates control the flow of information into and out of the cell, and the cell keeps track of values over arbitrary time intervals. LSTM algorithms have many applications in the analysis, classification, and prediction of time series of uncertain duration.

In short, the cell retains values over arbitrary time intervals, and the three gates regulate the flow of information into and out of it. Each gate works as follows:

- Input gate: decides which values from the input are used to modify the memory. A sigmoid function decides how much of each value to let through, on a scale from 0 to 1. In addition, a tanh function weights the candidate values, expressing their importance on a scale of -1 to 1.

- Forget gate: decides which details should be removed from the block. This is determined by a sigmoid function. For each number in the cell state Ct-1, it looks at the previous hidden state (ht-1) and the current input (Xt) and outputs a number between 0 (discard) and 1 (keep).

- Output gate: the output of the block is determined by its input and its memory. A sigmoid function decides how much of each value to let through, on a scale from 0 to 1. The tanh function scales the cell state values to the range -1 to 1, and the result is multiplied by the sigmoid output.
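The three gates described above can be written compactly as equations. This is the commonly used formulation and not necessarily the exact variant shown in the figure. The weight matrices $W_f, W_i, W_C, W_o$, the biases $b_f, b_i, b_C, b_o$, and the candidate state $\tilde{C}_t$ are symbols introduced here for illustration. $\sigma$ is the sigmoid function, $\odot$ is element-wise multiplication, and $[h_{t-1}, x_t]$ is the concatenation of the previous hidden state and the current input (Xt in the text).

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{(forget gate)} \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{(input gate)} \\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) && \text{(candidate values)} \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t && \text{(cell state update)} \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{(output gate)} \\
h_t &= o_t \odot \tanh\left(C_t\right) && \text{(block output)}
\end{aligned}
```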

The recurrent neural network uses long short-term memory blocks to provide context for how inputs and outputs are handled. The name comes from the fact that the block uses a structure based on short-term memory processes to build longer-term memory. These systems are used extensively in natural language processing.

For example, a recurrent neural network can use long short-term memory blocks to evaluate a single word or phoneme in the context of the others in a string, where memory helps in filtering and categorizing certain types of input. LSTM is a well-known and widely used idea in recurrent neural networks.

Long Short-Term Memory (LSTM) Networks

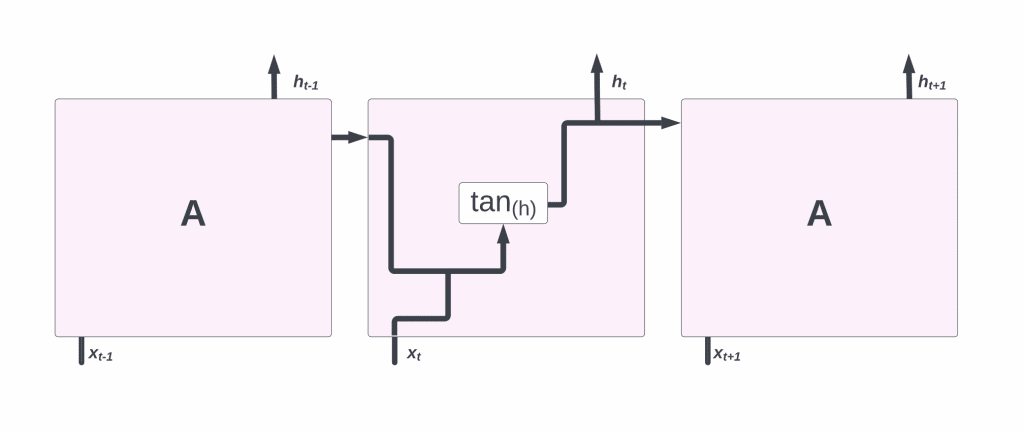

A recurrent neural network consists of a chain of repeating modules. In traditional RNNs, this repeating module has a simple structure, such as a single tanh layer.

Recurrence means that the output of the current time step becomes an input to the next time step. At each step of the sequence, the model considers not just the current input, but also what it has retained from previous inputs.

The repeating module of a conventional RNN contains a single layer.
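As a rough illustration of that single-layer module, the sketch below implements one step of a vanilla RNN with NumPy and applies it along a short sequence. The names `rnn_step`, `W_x`, `W_h`, and `b`, the sizes, and the random weights are all assumptions chosen for the example, not values from a trained model.

```python
import numpy as np


def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One step of a vanilla RNN: a single tanh layer."""
    return np.tanh(W_x @ x_t + W_h @ h_prev + b)


rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4

# Randomly initialised parameters (illustrative only).
W_x = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

sequence = rng.normal(size=(5, input_size))  # 5 time steps
h = np.zeros(hidden_size)
for x_t in sequence:
    # The hidden state from the previous step feeds into the current step.
    h = rnn_step(x_t, h, W_x, W_h, b)

print(h.shape)  # (4,)
```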

In LSTMs, the repeating module is made up of four layers that interact with each other. The memory cell state is represented by the horizontal line at the top of the diagram. The cell state works somewhat like a conveyor belt: it runs along the entire chain with only a few linear interactions, so it is easy for information to travel along it unchanged.

In an LSTM, information can be removed from or added to the cell state, and this is carefully regulated by structures called gates. Gates let information through selectively. Each gate is composed of a sigmoid neural net layer and a point-wise multiplication operation.

The sigmoid layer outputs numbers between 0 and 1 that describe how much of each component should be let through. A value of zero means "let nothing through", while a value of one means "let everything through". An LSTM has three of these gates to protect and control the cell state.
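The gating idea can be shown in a few lines of NumPy. In this sketch the gate's pre-activation values (`gate_logits`) are picked by hand to show the three regimes; in a real LSTM they would come from a learned layer.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


cell_state = np.array([2.0, -1.0, 0.5])

# Hand-picked pre-activations: strongly closed, half open, strongly open.
gate_logits = np.array([-20.0, 0.0, 20.0])
gate = sigmoid(gate_logits)  # values between 0 and 1

# Point-wise multiplication: each component is scaled by its own gate value.
gated = gate * cell_state

print(gate)
print(gated)
```

The first component is almost entirely blocked, the second is halved, and the third passes through almost unchanged.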

LSTM Cycle

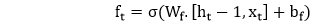

An LSTM cycle is divided into four steps. First, the forget gate identifies information to be forgotten from the previous time step. Second, the input gate and a tanh layer gather new information for updating the cell state. Third, this information is used to update the cell state. Finally, the output gate, together with a squashing operation, produces the output.
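The four steps can be sketched as a single NumPy function. This is a minimal sketch of the standard LSTM formulation with randomly initialised weights, not an optimised or trained implementation. The name `lstm_step`, the stacked weight matrix `W`, and the ordering of the gates inside it are conventions assumed here for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM cycle. W has shape (4 * hidden, hidden + input)."""
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b

    f = sigmoid(z[0 * hidden:1 * hidden])        # step 1: forget gate
    i = sigmoid(z[1 * hidden:2 * hidden])        # step 2: input gate
    c_tilde = np.tanh(z[2 * hidden:3 * hidden])  # step 2: candidate values
    c_t = f * c_prev + i * c_tilde               # step 3: update the cell state
    o = sigmoid(z[3 * hidden:4 * hidden])        # step 4: output gate
    h_t = o * np.tanh(c_t)                       # step 4: squash, then gate
    return h_t, c_t


rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.5, size=(4 * hidden_size, hidden_size + input_size))
b = np.zeros(4 * hidden_size)

h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x_t in rng.normal(size=(6, input_size)):  # 6 time steps
    h, c = lstm_step(x_t, h, c, W, b)

print(h.shape, c.shape)
```

Stacking the four layers into one matrix is only a convenience. Four separate weight matrices would compute the same thing.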

The output of an LSTM cell is passed to a dense layer. In the output stage, a softmax activation function is applied after the dense layer.
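A minimal sketch of that output stage follows. The vector `h_t` is a random stand-in for the hidden state an LSTM would produce, and `W_out`, `b_out`, and the number of classes are made-up values for illustration.

```python
import numpy as np


def softmax(logits):
    # Subtract the maximum for numerical stability; the result is unchanged.
    exp = np.exp(logits - np.max(logits))
    return exp / np.sum(exp)


def dense_softmax(h_t, W_out, b_out):
    """Dense (fully connected) layer followed by a softmax activation."""
    return softmax(W_out @ h_t + b_out)


rng = np.random.default_rng(0)
hidden_size, num_classes = 4, 3

h_t = rng.normal(size=hidden_size)  # stand-in for an LSTM output
W_out = rng.normal(size=(num_classes, hidden_size))
b_out = np.zeros(num_classes)

probs = dense_softmax(h_t, W_out, b_out)
print(probs, probs.sum())
```

The softmax turns the dense layer's raw scores into non-negative values that sum to 1, so they can be read as class probabilities.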

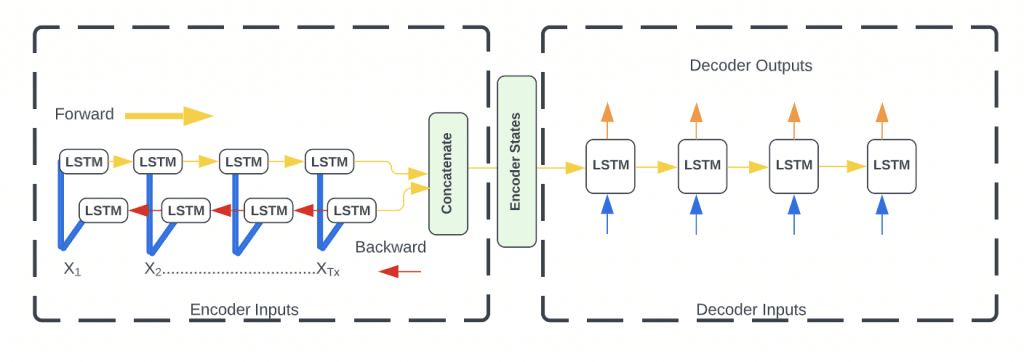

Bidirectional LSTMs

Bidirectional LSTMs are an often discussed enhancement of LSTMs. In Bidirectional Recurrent Neural Networks (BRNNs), a training sequence is presented forwards and backwards to two independent recurrent neural networks, both of which are connected to a single output layer. As a result, a BRNN has complete, sequential information about all the points before and after each point in a given sequence. There is also no need to specify a (task-dependent) time window or target delay, since the net is free to use as much or as little of this context as it needs.

BLSTM Encoder <> LSTM Decoder

Conventional RNNs have the disadvantage of only being able to use previous context. A bidirectional RNN (BRNN) overcomes this by processing the data in both directions with two hidden layers that feed forward to the same output layer. Combining a BRNN with LSTM gives a bidirectional LSTM, which can access long-range context in both input directions.
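The sketch below illustrates the bidirectional wiring only. To stay short it uses simple tanh RNN cells as a stand-in for LSTM cells, and all names (`run_rnn`, `bidirectional`, `make_params`) and weights are assumptions for the example. One network reads the sequence forwards, an independent one reads it backwards, and their hidden states are concatenated per time step before reaching a shared output layer.

```python
import numpy as np


def run_rnn(sequence, W_x, W_h):
    """Run a simple tanh RNN over a sequence and return every hidden state."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in sequence:
        h = np.tanh(W_x @ x_t + W_h @ h)
        states.append(h)
    return np.stack(states)


def bidirectional(sequence, fwd_params, bwd_params):
    forward = run_rnn(sequence, *fwd_params)
    # Process the reversed sequence, then flip the states back so that
    # row t of both arrays refers to the same time step.
    backward = run_rnn(sequence[::-1], *bwd_params)[::-1]
    return np.concatenate([forward, backward], axis=1)


rng = np.random.default_rng(0)
input_size, hidden_size, steps = 3, 4, 5


def make_params():
    # Independent, randomly initialised weights for one direction.
    return (rng.normal(scale=0.5, size=(hidden_size, input_size)),
            rng.normal(scale=0.5, size=(hidden_size, hidden_size)))


fwd_params, bwd_params = make_params(), make_params()
sequence = rng.normal(size=(steps, input_size))

context = bidirectional(sequence, fwd_params, bwd_params)
print(context.shape)  # (5, 8)
```

Each row of `context` combines a summary of everything up to that time step (first half) with a summary of everything from that time step onwards (second half).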

Applications of LSTM

LSTM in Natural Language Processing and Text Generation

Long Short-Term Memory (LSTM) networks have had a significant impact on Natural Language Processing (NLP) and text generation. LSTMs are a type of recurrent neural network (RNN) that can learn and remember over long sequences, effectively capturing the temporal dynamics of sequences and the context in text data. They excel in tasks that involve sequential data with long-term dependencies, such as translating a piece of text from one language to another or generating a sentence that follows the grammatical and semantic rules of a language.

A key characteristic of LSTMs that makes them suited to these tasks is their cell state structure, which can keep information in memory for extended periods. This structure mitigates the vanishing gradient problem, a challenge in traditional RNNs, where the network struggles to backpropagate errors through time and layers, particularly over many time steps. For example, when processing a long sentence, a traditional RNN might lose the context of the first words by the time it reaches the end, whereas an LSTM retains this information, which is crucial for understanding the meaning of the sentence as a whole.

In text generation, LSTMs can be trained on a large corpus of text, where they learn the probability distribution of the next character or word given a sequence of previous characters or words. Once trained, they can generate new text that is stylistically and syntactically similar to the training text. This capability has been used in a range of applications, including automated story generation, chatbots, and even the writing of entire movie scripts.
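To show what "the next character given a sequence of previous characters" looks like as training data, here is a small sketch that slices a text into (context, next character) pairs. The helper name `next_char_pairs` and the window length are assumptions for illustration. A real pipeline would also map characters to integer indices or one-hot vectors before feeding them to the network.

```python
def next_char_pairs(text, window):
    """Return (context, next_char) pairs using a sliding window."""
    return [(text[i:i + window], text[i + window])
            for i in range(len(text) - window)]


pairs = next_char_pairs("hello world", window=4)
print(pairs[:3])  # [('hell', 'o'), ('ello', ' '), ('llo ', 'w')]
```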

Applying LSTM for Time Series Prediction

Long Short-Term Memory (LSTM) networks are increasingly used for time series prediction, owing to their ability to learn long-term dependencies, which are a common feature of time series data. Time series prediction involves forecasting future values of a sequence, such as stock prices or weather conditions, based on historical data. LSTMs, with their memory cell structure, are well equipped to maintain temporal dependencies in such data and to capture patterns over time.

The LSTM's gating mechanisms (the forget, input, and output gates) allow the model to selectively remember or forget information. Applied to time series prediction, this lets the network give more weight to recent events while discarding irrelevant historical data. This selective memory makes LSTMs particularly effective where there is a significant amount of noise, or where the important events are sparsely distributed in time. For instance, in stock market prediction, an LSTM can focus on recent market trends and ignore older, less relevant data.

LSTMs also have an edge over traditional time series models such as ARIMA or exponential smoothing, as they can model complex nonlinear relationships and automatically learn temporal dependencies from the data. In addition, they can process multiple parallel time series (multivariate time series), a common occurrence in real-world data. This ability to handle complexity has led to widespread use of LSTMs in financial forecasting, demand prediction in supply chain management, and predicting the spread of diseases in epidemiology.
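Before an LSTM can be trained on a time series, the series is usually cut into fixed-length windows. The sketch below shows one common way to do this for a multivariate series. The helper name `make_windows`, the `lookback` of 3, and the toy data are assumptions for illustration. The resulting `(samples, time steps, features)` layout is the input shape that LSTM layers in common deep learning libraries typically expect.

```python
import numpy as np


def make_windows(series, lookback):
    """Split a (time, features) array into model inputs and targets.

    X has shape (samples, lookback, features); y holds the row that
    immediately follows each window.
    """
    X = np.stack([series[i:i + lookback]
                  for i in range(len(series) - lookback)])
    y = series[lookback:]
    return X, y


# Toy multivariate series: 10 time steps, 2 parallel features.
series = np.arange(20, dtype=float).reshape(10, 2)
X, y = make_windows(series, lookback=3)

print(X.shape, y.shape)  # (7, 3, 2) (7, 2)
```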

LSTM in Voice Recognition Systems

Long Short-Term Memory (LSTM) networks have brought about significant advances in voice recognition systems, primarily because of their proficiency in processing sequential data and handling long-term dependencies. Voice recognition involves transforming spoken language into written text, which inherently requires an understanding of sequences, in this case the sequence of spoken words. The LSTM's ability to remember past information for extended periods makes it particularly suited to such tasks, contributing to improved accuracy and reliability in voice recognition systems.

The LSTM model uses a series of gates to control the flow of information into and out of each cell, which makes it possible to maintain important context while filtering out noise or irrelevant details. This is crucial for voice recognition tasks, where the meaning of a word often depends on the words or phrases spoken before it. For instance, in a voice-activated digital assistant, the LSTM's ability to retain long-term dependencies can help capture user commands accurately, even when they are spoken in long, complex sentences.

LSTMs are less susceptible to the vanishing gradient problem than traditional Recurrent Neural Networks (RNNs), allowing them to learn from longer sequences of data, which are common in speech. As a result, LSTMs have been used extensively in major speech recognition systems, such as Apple's Siri, Google Assistant, and Amazon Alexa. By using LSTMs, these systems can process natural language more effectively, resulting in more accurate transcription and a better user experience.

Use of LSTM in Music Composition and Generation

Long Short-Term Memory (LSTM) networks have had a major influence on music composition and generation by making it possible to generate original musical pieces. Music is essentially sequential data, with each note or chord related to its predecessors and successors. The ability of LSTMs to maintain and manipulate sequential data makes them a fitting choice for music generation tasks. They can capture and reproduce the structure of music, taking into account not just the notes, but also their timing, duration, and intensity.

When trained on a dataset of music, an LSTM network can learn the patterns and dependencies that define the musical style of that dataset. For instance, when trained on a collection of classical piano pieces, the network can learn the characteristic harmonies, chord progressions, and melodies of that style. It can then generate new compositions that follow similar patterns, essentially "composing" new music in the style of the training data. The LSTM's ability to capture long-term dependencies helps the generated pieces stay coherent and musical over a long duration.

LSTMs are also used in interactive music systems, allowing users to create music collaboratively with the AI. They can generate continuations of a musical piece supplied by the user, and even adapt the generated music based on user feedback. The use of LSTMs in music generation not only opens up new avenues for creativity but also provides a valuable tool for studying and understanding music theory and composition.

LSTM for Video Processing and Activity Recognition

Long Short-Term Memory (LSTM) networks have proven highly effective in video processing and activity recognition tasks. Video data is inherently sequential and temporal, with each frame related to the frames before and after it. This property matches the LSTM's ability to handle sequences and remember past information. LSTMs can learn to identify and predict patterns in sequential data over time, which makes them useful for recognizing activities in videos, where temporal dependencies and sequence order are crucial.

In video processing tasks, LSTM networks are usually combined with convolutional neural networks (CNNs). CNNs extract spatial features from each frame, while LSTMs handle the temporal dimension, learning the sequence of actions over time. For example, in an activity recognition task, the CNN might extract features such as shapes and textures from individual frames, while the LSTM learns the sequence and timing of these features to identify a specific activity, such as a person running or a car driving. This combined CNN-LSTM approach can be very effective for video processing, allowing accurate recognition of complex activities that involve a sequence of actions over time.

LSTM has a number of other well-known applications as well, including:

- Robot control

- Time series prediction

- Speech recognition

- Rhythm learning

- Music composition

- Handwriting recognition

- Human action recognition

- Sign language translation

- Time series anomaly detection

- Several prediction tasks in the area of business process management

- Prediction in medical care pathways

- Semantic parsing

- Object co-segmentation

- Airport passenger management

- Short-term traffic forecast

- Drug design

- Market Prediction

Conclusion

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network capable of learning order dependence in sequence prediction problems, a behavior required in complex problem domains such as machine translation and speech recognition. LSTMs are a viable answer for problems involving sequences and time series, and many such problems in data science can be addressed with LSTM models.

Even simple models take a lot of time and system resources to train, so hardware is the main limitation. Traditional RNNs can only use past context. Bidirectional RNNs (BRNNs) overcome this by processing data in both directions at the same time.